Blog Post

Algorithmic management is the past, not the future of work

Algorithmic management is the twenty-first century’s scientific management. Job quality measures should be included explicitly in health and safety risk assessments for workplace artificial-intelligence systems.

AI in the workplace: what’s at stake?

The use of artificial intelligence in the workplace has been hailed as both the future of work and its destruction. Worker-friendly applications of AI include the automation of dangerous, dirty and dull tasks, strategic workforce planning, and learning and reskilling. However, applications that might harm rather than help workers are also emerging. AI algorithms in hiring and promotion have been shown to discriminate, for example. Equally worrying for workers is algorithmic management (AM).

AM is the use of AI to assign tasks and monitor workers. It includes continuous tracking of workers, constant performance evaluation and the automatic implementation of decisions, without human intervention. These algorithms are designed to optimise the efficient allocation of resources in the production of goods and services, helping organisations reduce costs, maximise profits and ensure competitiveness in the market.

However, optimising efficiency can come at the expense of worker wellbeing. Deteriorating job quality is often a side effect of scheduling and allocation algorithms. In warehouses, robots are not yet replacing workers, but algorithms are optimising jobs to make workers more like robots and to minimise workers’ idle time (to the point that they skip bathroom breaks). In retail, scheduling algorithms present workers with long and unpredictable hours, making it next to impossible to balance personal life with work. No longer confined to digital labour platforms, AM is now pervasive throughout the whole economy, particularly in retail, call centres, hospitals and warehouses.

None of this is new, however. We need not look far to find evidence of the harmful effects of such optimisation practices. Frederick Taylor’s Principles of Scientific Management, written in 1911, reads like a twenty-first-century guide to data-driven management: data collection and process analysis, efficiency and standardisation, and knowledge transfer from workers into tools, processes and documentation. The digital transformation that organisations have gone through in recent decades has left them with mountains of data and cheap technology for storing and analysing that data. With the rise of workplace AI, Taylor’s dream of perfectly optimised work processes might finally become a reality.

However, that would come at a price. The Ford factories adhering to Taylor’s principles had a staff turnover rate of over 350% (meaning the entire staff had to be replaced 3.5 times per year). Turnover on that scale suggests that job quality on the Ford assembly lines was extremely poor.
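
The turnover figure can be read as simple arithmetic. As a rough sketch, assume that annual turnover is measured as the number of departures in a year divided by the average number of positions (a common definition, assumed here rather than taken from the Ford records), and take a purely hypothetical workforce of 1,000 positions:

```latex
\text{turnover rate}
  = \frac{\text{departures in a year}}{\text{average headcount}} \times 100\%
  = \frac{3500}{1000} \times 100\%
  = 350\%
```

Under these illustrative numbers, an employer would need around 3,500 hires in a year just to keep 1,000 positions filled.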

There is a large body of evidence on the effect of job autonomy on workers’ wellbeing and health. Jobs with low autonomy or control have been shown to lead to negative health outcomes and mental strain. Acute conditions, cardiovascular risk, musculoskeletal disorders, mental health problems, functional disabilities and self-assessed health problems are also associated with low-autonomy jobs among older workers. Data on observable health outcomes confirms the self-assessed effects: coronary heart disease and even cardiovascular mortality have been found to be impacted by job control over time. These long-term effects on workers’ health could be felt years after they have been exposed to low-autonomy jobs.

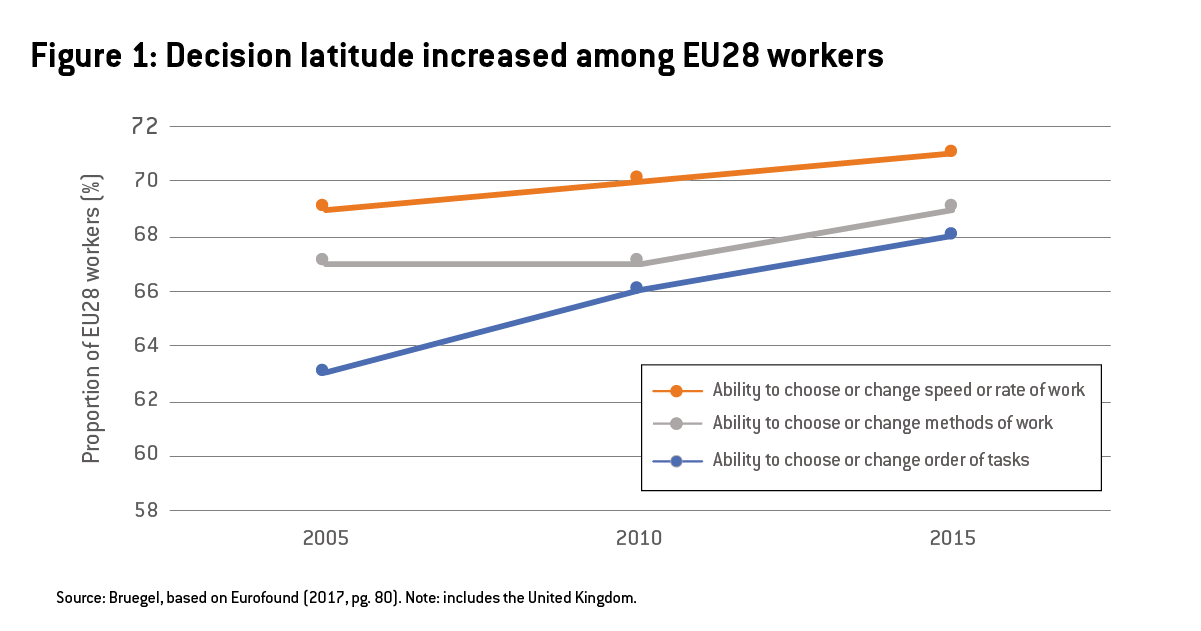

Indicators of job quality developed by Eurofound (the European Foundation for the Improvement of Living and Working Conditions) include the ability to change the order of tasks, the ability to choose or change the speed or rate of work and the ability to change or choose methods of work. All three measures increased in Europe between 2005 and 2015 (Figure 1). Given AI’s specific impact on automating exactly those types of decisions, there is a real risk that these forms of workplace AI could reverse that evolution and set worker wellbeing back 100 years.

AI regulation versus labour regulation

Some protection for European Union workers against excessive AI-based optimisation might come from European Commission proposals on ensuring trustworthy AI, published on 21 April 2021. The Commission’s goal is to guarantee the health, safety and fundamental rights of people and businesses, while promoting AI adoption and innovation.

The proposal identifies eight areas of AI application considered high risk for health and safety. Rightly, the Commission includes among these the use of AI in employment and workers’ management. The Commission specifically mentions algorithms for assigning people to jobs (recruitment, selection, promotion and termination) and algorithms for scheduling and productivity (task allocation, monitoring and evaluation). According to the Commission, these systems “may appreciably impact future career prospects and livelihoods of workers” by “perpetuating historical patterns of discrimination”, and violating “rights to data protection and privacy”.

However, as we have shown, concern about AI in the workplace should extend beyond career prospects and livelihoods into job quality and worker wellbeing. Besides AI regulation, EU workplace regulation could help mitigate the health risks associated with low job control stemming from algorithmic management. At European level, two main bodies of legislation are relevant in this context: labour law (covering working conditions such as working hours, part-time work and posting of workers, as well as informing and consulting workers about collective redundancies and transfers of companies) and the Occupational Safety and Health (OSH) Framework Directive (89/391/EEC) (creating a legal obligation for employers to protect their workers by avoiding, evaluating and combating risks to their safety and health)[1].

[1] Specifically on the potential discriminatory effects of AI, another relevant body of legislation deals with tackling discrimination at work.

But neither body of legislation seems geared to the large-scale impact and fine-tuned precision of workplace AI systems. Employers have already been found to use AI in ways that erode labour laws. Law professors Alexander and Tippett call this “the hacking of employment law”, describing practices in which employers use software to “implement systems that are largely consistent with existing laws but violate legal rules on the margin”.

However, the main legislative shortcoming related to the undermining of workers’ autonomy (and long-term health) is that the specification of workplace risks and the criteria for assessing them are left too vague. While the practical guide of the European Agency for Safety and Health at Work (EU-OSHA) addresses psychosocial factors, the OSH directive doesn’t mention any specific risks. The terms ‘stress’ and ‘psychosocial risks’ are not mentioned explicitly in most of the legislation, leading to a lack of clarity or consensus on the terminology used. This leaves room for employers to pick and choose which risks to consider, and how to measure, address and mitigate them. The Commission’s proposed AI regulation also leaves the definition of risks insufficiently specified.

Shortcomings and suggestions

Under the Commission’s proposal, since employment and workers’ management is included in the eight high-risk areas, workplace AI systems would be subject to strict obligations before they can be put on the market, including requirements for risk assessments and mitigation systems, data quality checks to minimise the risk of discrimination, logging of activity to ensure traceability, and transparency measures including detailed documentation and user information.

This is insufficient to protect workers adequately. Workplace AI systems will only be subject to risk assessments carried out by the employer or provider of the AI system. To strengthen worker protection, social partners could be given a role in overseeing AI systems at work. Workers opposing the outputs of high-risk AI systems could be given protection against disciplinary measures imposed by employers. Indeed, worker participation in the implementation and assessment of AI could partially mitigate the psychosocial risks of autonomy-reducing AI systems.

But besides the issue of who should assess the risks of workplace AI systems, there is the issue of which risks should be included in the mandatory assessment. The Commission’s proposed AI regulation lists requirements for risk-management systems for high-risk AI systems in Article 9, the first step being “identification and analysis of the known and foreseeable risks associated with each high-risk AI system” (our emphasis). The emphasis throughout the proposal on safety, health and human rights leaves the interpretation of these “known and foreseeable risks” too broad, with too much room for picking some risks over others.

While employers will consider obvious immediate safety risks (for example, the risk that a robot accidentally hurts a worker with its robotic arm), they might not equally consider the long-term health risks associated with taking away workers’ autonomy. Given the link between job quality and health, job control measures are a more responsive indicator to assess whether an AI system poses a risk to workers’ wellbeing in the long run. Job quality (and autonomy in particular) should therefore be explicitly included as a measure in the risk assessment of workplace AI, and processes should be put in place to mitigate any residual impact of AI on job quality and worker wellbeing.

The need for more tools and guidance on psychosocial risk management is clear, but for the risk definition to be binding, the best place to address it is the OSH legislation itself. The proposed AI regulation could then refer to psychosocial risks as defined in OSH legislation to be included in the required risk assessment and mitigation systems for the high-risk area of employment and workers’ management.

Eurofound’s job-control indicators – the ability to choose the order of tasks, the speed of work and the methods of work – provide a starting point for developing measures for psychosocial risk assessment. Given AI’s specific impact on automating exactly those types of decisions, it is important to understand how different forms of autonomy relate to wellbeing at work. Not all autonomy is the same and different aspects of job control have different effects on wellbeing. Current research suggests that scheduling autonomy (choosing the order of tasks) could be stress-reducing, while learning autonomy (experimenting with methods of work) could be motivating. Only by understanding the distinctive impact of different types of autonomy on stress and engagement at work can the risks of AI for worker wellbeing be correctly assessed and mitigated. In an increasingly digital world of work, careful job design matters more than ever.

Recommended citation:

Nurski, L. (2021) ‘Algorithmic management is the past, not the future of work’, Bruegel Blog, 6 May

This blog was produced within the project “Future of Work and Inclusive Growth in Europe”, with the financial support of the Mastercard Center for Inclusive Growth.
